Beginner’s Guide: Run DeepSeek R1 Locally

You can run DeepSeek R1 on your own computer! The full model needs very powerful hardware, so we'll use a smaller "distilled" version (a compact model trained on DeepSeek R1's outputs) that runs well on regular computers.

Why use a smaller version?

- Works smoothly on most modern computers

- Downloads much faster

- Uses less storage space on your computer

Quick Steps at a Glance

- Download and install Jan (just like any other app!)

- Pick a version that fits your computer

- Choose a quantization setting (Q4 or Q8)

- Set up the prompt template and start chatting!

Keep reading for a step-by-step guide with pictures.

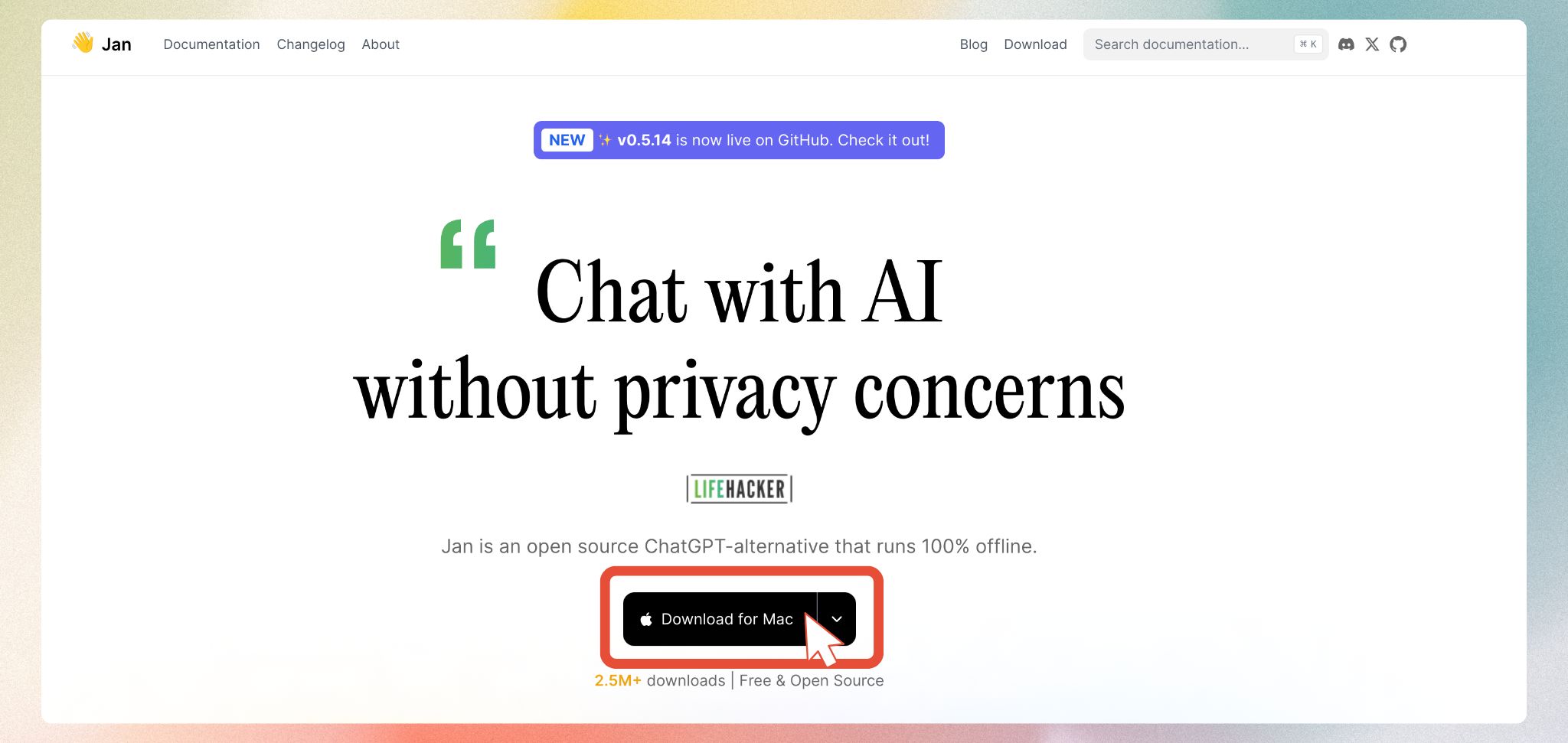

Step 1: Download Jan

Jan is a free app that helps you run AI models on your computer. It works on Windows, Mac, and Linux, and it's super easy to use: no coding needed!

- Get Jan from jan.ai

- Install it like you would any other app

- That's it! Jan takes care of all the technical stuff for you

Step 2: Choose Your DeepSeek R1 Version

DeepSeek R1 comes in different sizes. Let's help you pick the right one for your computer.

💡 Not sure how much VRAM (your graphics card's memory) your computer has?

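- Windows: Press Windows + R, type "dxdiag", press Enter, click the "Display" tab, and look for "Display Memory (VRAM)"

- Mac: Click Apple menu > About This Mac > More Info > Graphics/Displays. On Apple Silicon Macs, the GPU shares the system's memory, so your total memory is the number that matters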
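Optional, for NVIDIA graphics cards only: you can also read your VRAM from the command line. The sketch below assumes the `nvidia-smi` tool (installed with NVIDIA's drivers) is on your PATH; it will not work for AMD or Intel graphics or on Macs. The function names `parse_vram_mib` and `query_vram_gb` are made up for this example.

```python
import shutil
import subprocess


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits`
    output: one total-memory value in MiB per GPU, one GPU per line."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_vram_gb() -> list[float]:
    """Return total VRAM per NVIDIA GPU in binary gigabytes (1 GB = 1024 MiB here).

    Returns [] if nvidia-smi is not installed; raises CalledProcessError if it fails.
    """
    if shutil.which("nvidia-smi") is None:
        return []  # No NVIDIA tools found (e.g. AMD/Intel graphics or a Mac).
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [mib / 1024 for mib in parse_vram_mib(result.stdout)]


if __name__ == "__main__":
    gpus = query_vram_gb()
    if not gpus:
        print("nvidia-smi not found; use dxdiag or About This Mac instead.")
    for index, gb in enumerate(gpus):
        print(f"GPU {index}: {gb:.1f} GB VRAM")
```

If the script prints nothing useful, the dxdiag and About This Mac steps above still work.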

Below is a detailed table showing which version you can run based on your computer's VRAM:

| Version | Link to Paste into Jan Hub | Required VRAM for smooth performance |
|---|---|---|
| Qwen 1.5B | https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-1.5B-GGUF | 6GB+ VRAM |
| Qwen 7B | https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-7B-GGUF | 8GB+ VRAM |
| Llama 8B | https://huggingface.co/unsloth/DeepSeek-R1-Distill-Llama-8B-GGUF | 8GB+ VRAM |
| Qwen 14B | https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-14B-GGUF | 16GB+ VRAM |
| Qwen 32B | https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF | 16GB+ VRAM |
| Llama 70B | https://huggingface.co/unsloth/DeepSeek-R1-Distill-Llama-70B-GGUF | 48GB+ VRAM |

Quick Guide:

- 6GB VRAM? Start with the 1.5B version - it's fast and works great!

- 8GB VRAM? Try the 7B or 8B versions - good balance of speed and smarts

- 16GB+ VRAM? Try the 14B or 32B versions for even better results (the 70B version needs 48GB+)
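The table above boils down to a simple lookup: compare your VRAM against each version's recommended minimum. Here is a minimal Python sketch of that lookup, using only the numbers from the table; the names `VERSIONS_BY_VRAM`, `versions_that_fit`, and `recommend` are hypothetical and not part of Jan.

```python
from typing import Optional

# (recommended minimum VRAM in GB, version) copied from the table above,
# ordered from largest model to smallest so the first match is the biggest fit.
VERSIONS_BY_VRAM = [
    (48, "Llama 70B"),
    (16, "Qwen 32B"),
    (16, "Qwen 14B"),
    (8, "Llama 8B"),
    (8, "Qwen 7B"),
    (6, "Qwen 1.5B"),
]


def versions_that_fit(vram_gb: float) -> list[str]:
    """All versions whose recommended VRAM is at most vram_gb, largest first."""
    return [name for min_gb, name in VERSIONS_BY_VRAM if vram_gb >= min_gb]


def recommend(vram_gb: float) -> Optional[str]:
    """The largest version that fits, or None if even the 1.5B version is too big."""
    fits = versions_that_fit(vram_gb)
    return fits[0] if fits else None


for vram in (4, 6, 8, 16, 48):
    print(f"{vram} GB VRAM -> {recommend(vram)}")
```

For example, `versions_that_fit(8)` lists the Llama 8B, Qwen 7B, and Qwen 1.5B versions. Bigger is not always faster, so the Quick Guide's advice still applies when picking among the versions that fit.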

Ready to download? Here's how:

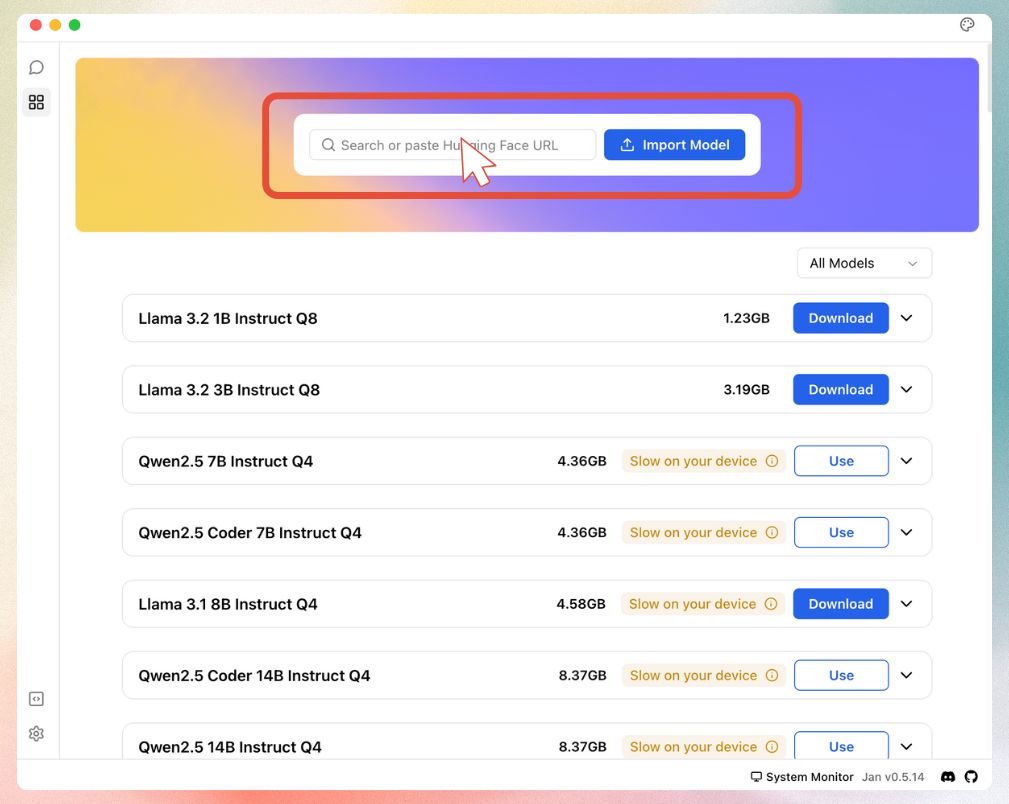

- Open Jan and click the button in the left sidebar to open Jan Hub

- Find the "Add Model" section

- Copy the link for your chosen version from the table above and paste it into the Add Model field

Step 3: Choose Model Settings

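When adding your model, you'll pick a quantization level, which sets how much the model file is compressed. The two main options are:

- Q4: Perfect for most users, fast and works great! ✨ (Recommended)

- Q8: Slightly more accurate, but uses roughly twice as much memory as Q4 and needs more powerful hardware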
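Why does Q8 need more powerful hardware? A rough rule of thumb is that the model's weights take about (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that rule using the nominal sizes from the version names (for example 7 billion for "Qwen 7B"); `estimate_weights_gb` is a hypothetical helper. Treat the results as lower bounds: real files are somewhat larger because quantized formats also store scale factors, and running the model needs extra memory for your conversation.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in decimal GB (1 GB = 1e9 bytes).

    bytes = parameters x bits per weight / 8 bits per byte.
    Ignores quantization overhead and the memory used while chatting.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for name, params in [("Qwen 7B", 7), ("Qwen 14B", 14), ("Qwen 32B", 32)]:
    q4 = estimate_weights_gb(params, 4)
    q8 = estimate_weights_gb(params, 8)
    print(f"{name}: ~{q4:.1f} GB at Q4, ~{q8:.1f} GB at Q8")
```

For the 7B version this gives about 3.5 GB at Q4 versus 7.0 GB at Q8, which is why Q4 is the safer default on smaller graphics cards.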

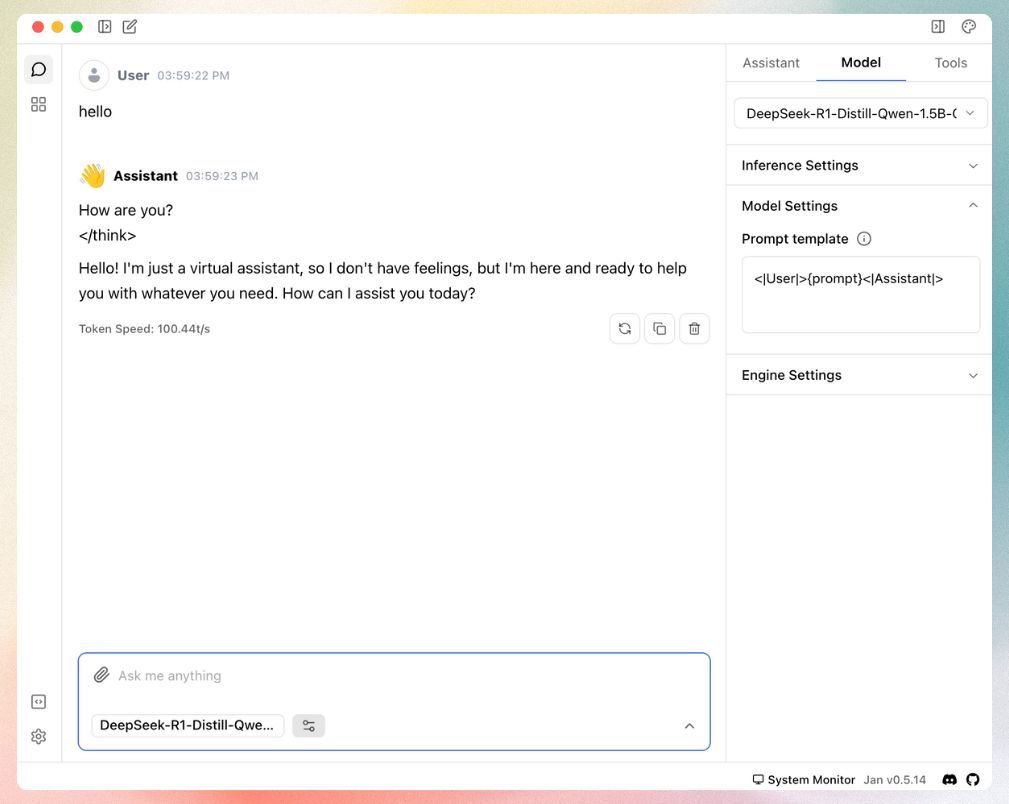

Step 4: Set Up & Start Chatting

Almost done! Just one quick setup:

- Click Model Settings in the sidebar

- Find the Prompt Template box

- Copy and paste this exactly:

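<｜User｜>{prompt}<｜Assistant｜>

(The bars are the full-width character ｜, not the ordinary |, so copy and paste it rather than typing it.)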

Jan swaps {prompt} for your message, and the markers around it tell DeepSeek where your message begins and where its reply should start.
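To see what the template does, here is a tiny Python sketch of the substitution: the `{prompt}` placeholder is replaced by whatever you type. This only illustrates the idea and is not Jan's actual code; `fill_template` is a hypothetical name. DeepSeek R1's tokenizer spells these markers with the full-width bar ｜ (U+FF5C), which is what the string below uses.

```python
# Prompt template for DeepSeek R1. The bars are U+FF5C (full-width vertical line),
# matching the special tokens in DeepSeek R1's tokenizer.
TEMPLATE = "<\uff5cUser\uff5c>{prompt}<\uff5cAssistant\uff5c>"


def fill_template(template: str, user_message: str) -> str:
    """Insert the user's message where the {prompt} placeholder sits."""
    return template.replace("{prompt}", user_message)


print(fill_template(TEMPLATE, "What is 2 + 2?"))
# <｜User｜>What is 2 + 2?<｜Assistant｜>
```

The model then continues writing after the Assistant marker, and that continuation is the reply you see in the chat.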

Now you're ready to start chatting!

Need help?

Having trouble? We're here to help! Join our Discord community for support.